The Step-by-Step Guide to Agent Scorecards

by Gabriel De Guzman | Published On July 23, 2025


For something that plays such a massive role in how customer service teams operate, agent scorecards rarely get the spotlight they deserve.


Most agents and managers have seen a scorecard. Or even filled one out. But many still don’t understand what they’re for, how they’re built, or why they’re so important. Some still see scorecards as a checklist for quality assurance. Others think of them as performance reports. A few even think they’re just to keep people in line.

But when they’re created and used well, agent scorecards are some of the most practical, insightful tools a support team can have. They give managers visibility, agents clarity, and customers a better shot at getting the support they deserve.

Here’s your guide to everything you need to know about agent scorecards, from why they matter (for everyone), to how you can build one that works.

What is an Agent Scorecard?

An agent scorecard is a way to measure how someone on your support team is performing. It’s usually a document, either digital or printed, or a view built into your contact center software alongside its reporting tools. It tracks the specific behaviors, metrics, and results an employee achieves, much like the report cards you got at school.

But instead of grading someone only on outcomes (like how many tickets they closed), it also looks at how they got there: Did they follow company policy? Did they communicate clearly? Did they make the customer feel heard? These tools are meant to do three important things:

- Standardize performance evaluation: So every agent is assessed fairly, based on the actual goals and priorities of the business and contact center.

- Surface coaching opportunities: Showing strengths and weaknesses, so managers know where their team members need the most guidance.

- Identify important insights: Revealing trends in call wait times, first contact resolution rates, customer satisfaction, and even agent engagement.

Without a tool like this, it’s all too easy to focus on flashy surface metrics or overlook soft skills entirely. And that’s where a lot of support teams go sideways. Scorecards help tie frontline performance directly to quality standards and customer expectations, especially when combined with regular reviews and feedback.

The Core Components of Agent Scorecards

Not all scorecards are built the same, but here are the most common ingredients you’ll see:

1. Performance Metrics (The Numbers)

These are usually the quantitative pieces, the hard data on things like:

- CSAT (Customer Satisfaction Score): Usually based on post-interaction surveys.

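- AHT (Average Handle Time): The average time an agent spends on each interaction, typically counting talk time, hold time, and after-call work.

- FCR (First Call Resolution): Whether the customer’s issue was resolved on the first contact, without the customer needing to follow up.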

Each of these metrics does something slightly different: CSAT tells you how the customer felt, AHT hints at efficiency, and FCR shows how effectively issues get resolved. Some companies add further KPIs, like Net Promoter Score (NPS), to gauge how likely customers are to recommend the company, or Customer Effort Score (CES), to see how much effort customers have to put in to get help.
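To make the three core metrics concrete, here is a minimal Python sketch that computes them from a list of interaction records. The `Interaction` fields are hypothetical (every platform exports different data), and treating CSAT as the share of 4s and 5s on a 1–5 survey is one common convention, not the only one; some teams report the average rating instead.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Interaction:
    # Hypothetical record layout; real platforms export different field names.
    agent: str
    talk_seconds: float
    hold_seconds: float
    wrap_seconds: float            # after-call work
    resolved_first_contact: bool
    csat_response: Optional[int]   # 1-5 survey answer, None if no survey came back


def csat_pct(interactions):
    """Share of survey responses rated 4 or 5 (a common 'top-two-box' convention)."""
    responses = [i.csat_response for i in interactions if i.csat_response is not None]
    if not responses:
        return None  # no surveys returned, so no CSAT for this period
    return 100 * sum(r >= 4 for r in responses) / len(responses)


def aht_seconds(interactions):
    """Average handle time: talk + hold + wrap-up, averaged over all contacts."""
    return mean(i.talk_seconds + i.hold_seconds + i.wrap_seconds for i in interactions)


def fcr_pct(interactions):
    """Share of contacts resolved on the first contact."""
    return 100 * sum(i.resolved_first_contact for i in interactions) / len(interactions)


calls = [
    Interaction("sarah", 300, 30, 60, True, 5),
    Interaction("sarah", 420, 0, 90, False, 3),
    Interaction("sarah", 240, 60, 30, True, None),
    Interaction("sarah", 360, 0, 60, True, 4),
]
print(csat_pct(calls), aht_seconds(calls), fcr_pct(calls))
```

For these four sample calls, CSAT comes out at about 66.7% (two of three surveys scored 4 or higher), AHT at 412.5 seconds, and FCR at 75%. Note that the call with no survey still counts toward AHT and FCR.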

2. Behavioral/Compliance Checks

This is where scorecards go beyond raw numbers and start looking at how the agent did the work.

- Did they verify the customer’s identity correctly?

- Did they use the approved script where needed?

- Was their tone professional and clear?

Some of this gets scored as yes/no, some gets a rating, and some depends on the channel (email, phone, live chat, etc.). With conversation analytics and AI tools, some companies even check automatically whether agents said specific required phrases (like “this call is being recorded”).
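The simplest version of that automated phrase check is plain text matching against a call transcript. The sketch below assumes a transcript already exists as text, and the checklist items and phrases are hypothetical examples; real conversation analytics tools go well beyond exact matching, so treat this as an illustration of the yes/no idea rather than how any particular product works.

```python
import re

# Hypothetical checklist: each item passes if any of its phrases appears in the transcript.
REQUIRED_PHRASES = {
    "recording_disclosure": ["this call is being recorded", "this call may be recorded"],
    "identity_verification": ["verify your identity", "confirm your date of birth"],
}


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse runs of whitespace."""
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def compliance_checks(transcript: str) -> dict:
    """Return {checklist_item: True/False} for one transcript."""
    # Pad with spaces so a phrase only matches on whole-word boundaries.
    clean = f" {normalize(transcript)} "
    return {
        item: any(f" {normalize(p)} " in clean for p in phrases)
        for item, phrases in REQUIRED_PHRASES.items()
    }


transcript = ("Thanks for calling! This call is being recorded for quality purposes. "
              "Before we start, can you confirm your date of birth?")
print(compliance_checks(transcript))
# {'recording_disclosure': True, 'identity_verification': True}
```

Exact matching misses paraphrases ("we record our calls"), which is one reason teams layer AI analysis on top, and why a human reviewer should still be able to override an automated "no".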

3. Soft Skills and Quality Signals

Now we’re in the more subjective zone. But this zone matters a lot.

- Did the agent listen actively?

- Are they showing empathy?

- Did they de-escalate appropriately when the customer was upset?

These elements are harder to score with pure numbers, but they often make or break a customer’s perception of the service. That’s why a growing number of teams (especially in high-contact industries like insurance, banking, or healthcare) are investing in tools that capture sentiment and tone with AI analytics.

Why Agent Scorecards Matter (For Everyone)

You can have the best tech stack in the world: a robust CRM, lightning-fast ticketing tools, AI-powered chatbots, the works. But if you’re not paying attention to how your people perform (not just what they do, but how they do it), you’re missing half the picture.

That’s where agent scorecards prove their worth. They don’t just track performance. When they’re built and used right, they change it.

For Managers: You Finally Know What to Coach

If you’ve ever sat down to give an agent feedback and found yourself saying vague things like “just be more confident” or “watch your tone,” then you already know the pain of coaching without structure. A good agent scorecard gives you that structure:

- You can spot patterns. Maybe Sarah keeps missing her FCR targets, but her CSAT is sky-high. That’s a coaching moment.

- You can separate the outliers from the norm. If one agent’s AHT is way off, the scorecard helps you figure out if it’s due to tougher cases, a training gap, or something else.

- You can base feedback on actual behavior, not hearsay.

It also makes end-of-month reviews much easier. Instead of digging through old tickets or relying on memory, you’ve got a clear, documented record of performance over time.
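Separating outliers from the norm can start with a simple statistical flag. This sketch uses the modified z-score (based on the median absolute deviation, with the commonly cited 3.5 cutoff), a technique swapped in here for illustration rather than something scorecards do on their own. Medians are used because one extreme agent would otherwise inflate the average and hide itself, which matters on small teams. The agent names and AHT values are made up.

```python
from statistics import median


def flag_outliers(metric_by_agent: dict, cutoff: float = 3.5) -> list:
    """
    Flag agents whose metric sits far from the team median, using the
    modified z-score: 0.6745 * |value - median| / MAD, where MAD is the
    median absolute deviation from the team median.
    """
    values = list(metric_by_agent.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # at least half the team shares the median value; no usable spread
    return [agent for agent, v in metric_by_agent.items()
            if 0.6745 * abs(v - med) / mad > cutoff]


aht_by_agent = {"sarah": 410, "dev": 395, "mina": 430, "leo": 402, "ana": 720}
print(flag_outliers(aht_by_agent))  # ['ana']
```

The flag only says that an agent’s number is unusual. Whether that’s tougher cases, a training gap, or something else is still a question for the scorecard review and the coaching conversation.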

For Agents: You Finally Know What You’re Being Measured On

Most agents aren’t asking for gold stars. They just want to know what’s expected of them, what they’re doing well, and how to improve. A transparent, well-structured agent scorecard makes that possible.

- Teams know exactly what quality assurance standards they need to follow.

- Everyone is on the same page about expectations.

- Agents receive a clear way to track progress and advocate for themselves.

This kind of clarity is huge for morale. When people know how they’re being measured, they’re less anxious. And when feedback is based on facts, it feels a lot more constructive. Scorecards can also show growth. Over time, agents can literally see how they’ve improved, and that’s motivating.

For Customers: Better Service, Less Friction

Here’s where all of this starts to really pay off. Most customers don’t care what your internal metrics look like. They care about getting their issue resolved quickly and being treated like a human being in the process. Scorecards help make that happen, by:

- Encouraging agents to stick to processes that work.

- Nudging them to use empathy and active listening more consistently.

- Reducing mistakes, missteps, and mixed messages.

When agents follow clear guidelines, First Call Resolution (FCR) tends to rise, and that matters. According to SQM Group, CSAT moves with FCR: each 1% improvement in FCR typically corresponds to about a 1% improvement in customer satisfaction.

How to Create an Effective Agent Scorecard

Building an agent scorecard sounds simple, until you sit down to do it. Then suddenly you’re staring at a blank document wondering if you need to include “email open rate” or “how upbeat someone sounds on Mondays.”

So here’s a better approach: break it down into steps, build something lean, and evolve from there.

Step 1: Pick the Right KPIs

The biggest mistake people make is usually trying to track too much, too soon. You don’t necessarily need 15 metrics. You need the right 3–5 that actually reflect what matters to your business and your customers. Here’s a solid starter list:

- CSAT: Are customers walking away satisfied?

- AHT (Average Handle Time): Is the call taking an efficient amount of time, or dragging on forever?

- FCR (First Call Resolution): Did the customer have to call back?

- Adherence to Process or Script: Are agents following the flow that’s been tested and proven to work?

- QA or Compliance Score: This one can be custom; it includes whether they followed company policy, handled the case cleanly, used the correct tools, etc.

Tailor your scorecard to your business and team goals. If you’re running a tech support team, maybe you want to track resolution accuracy. If it’s a sales team, conversion rate might make more sense than CSAT.
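One way to keep a starter scorecard lean is to write it down as a short list of KPIs, each with a target and a direction. The sketch below is hypothetical throughout: the KPI names and targets are placeholders, not industry benchmarks, and the `KPI` class is an illustration rather than any tool’s data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KPI:
    name: str
    target: float
    higher_is_better: bool = True  # set False for metrics like AHT where lower is better


# Hypothetical starter scorecard. Targets are placeholders, not industry benchmarks.
STARTER_SCORECARD = [
    KPI("csat_pct", 85.0),
    KPI("aht_seconds", 420.0, higher_is_better=False),
    KPI("fcr_pct", 75.0),
    KPI("qa_compliance_pct", 90.0),
]


def check_targets(scorecard, actuals):
    """Return {kpi_name: met_target} for each KPI the agent has data for."""
    results = {}
    for kpi in scorecard:
        if kpi.name not in actuals:
            continue  # no data this period; don't count it as a miss
        value = actuals[kpi.name]
        results[kpi.name] = value >= kpi.target if kpi.higher_is_better else value <= kpi.target
    return results


sarah = {"csat_pct": 91.0, "aht_seconds": 455.0, "fcr_pct": 68.0, "qa_compliance_pct": 94.0}
print(check_targets(STARTER_SCORECARD, sarah))
# {'csat_pct': True, 'aht_seconds': False, 'fcr_pct': False, 'qa_compliance_pct': True}
```

Swapping in resolution accuracy for a tech support team, or conversion rate for a sales team, is just a different list of `KPI` entries.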

Step 2: Choose a Scoring Method That Makes Sense

There’s no single “best” way to score. It depends on your goals and how you plan to use the results. Here are the most common options:

- Numeric Scale (1–5, 1–10, etc.): These scales are flexible and give you clear gradients of quality, but they can get confusing if the scoring criteria aren’t clearly defined.

- Pass/Fail (Yes/No): Pass/Fail cards are straightforward and easy to calibrate across reviewers, but they leave no room for nuance or partial credit.

- Weighted Scores: These scores help you emphasize what matters most (e.g., maybe FCR is 40% of the score, empathy is 20%). But this strategy takes more setup and explanation.
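Weighted scoring is mostly arithmetic: put every criterion on the same 0–1 scale, multiply by its weight, and add. This sketch mixes a 1–5 rating with pass/fail checks. The 40% FCR and 20% empathy weights come from the example above; the compliance and process criteria and their weights are hypothetical fillers for the remaining 40%.

```python
def from_scale(rating: int, low: int = 1, high: int = 5) -> float:
    """Map a rating on a low-high scale (1-5 by default) onto 0.0-1.0."""
    if not low <= rating <= high:
        raise ValueError(f"rating {rating} outside {low}-{high}")
    return (rating - low) / (high - low)


def from_pass_fail(passed: bool) -> float:
    """Map a yes/no check onto 0.0 or 1.0."""
    return 1.0 if passed else 0.0


def weighted_score(scores: dict, weights: dict) -> float:
    """
    Combine normalized criterion scores (each 0.0-1.0) into a 0-100 total.
    Weights are divided by their sum, so they don't have to add up to exactly 1.
    """
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return 100 * sum(scores[c] * w for c, w in weights.items()) / total_weight


# FCR at 40% and empathy at 20% follow the example above; the other two weights are hypothetical.
weights = {"fcr": 0.4, "empathy": 0.2, "compliance": 0.2, "process": 0.2}
scores = {
    "fcr": from_pass_fail(True),         # resolved on first contact
    "empathy": from_scale(4),            # 4 on a 1-5 scale -> 0.75
    "compliance": from_pass_fail(True),
    "process": from_scale(3),            # 3 on a 1-5 scale -> 0.5
}
print(round(weighted_score(scores, weights), 1))  # 85.0
```

Raising an error on a missing criterion, instead of silently scoring it as zero, keeps an unscored item from quietly dragging an agent’s total down.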

What matters most? Pick something your QA team and your managers can use consistently. If your reviewers can’t agree on how to score a call, the method isn’t clear enough, or the rubric needs reworking.

Step 3: Customize by Role or Channel

Not every agent does the same job. So why would they all get the same scorecard?

Here’s what that can look like:

- Phone support might be graded on tone, call control, and de-escalation.

- Live chat could focus on grammar, speed, and multitasking ability.

- Email agents might be rated on clarity, structure, and resolution accuracy.

- Outbound sales teams might need to be measured on conversion rate, objection handling, and product knowledge.

Customize your scorecard by role or at least by channel. One-size-fits-all will only lead to frustration, especially for your top performers who feel like they’re being scored on the wrong things.
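In practice, customizing by channel often means keeping one template per channel. The sketch below reuses the criteria listed above; the idea of a small shared block (here, policy compliance) that appears on every channel’s card is a hypothetical design choice, as are the identifier names.

```python
# Per-channel criteria, taken from the examples above (identifier names are hypothetical).
CHANNEL_CRITERIA = {
    "phone": ["tone", "call_control", "de_escalation"],
    "chat": ["grammar", "response_speed", "multitasking"],
    "email": ["clarity", "structure", "resolution_accuracy"],
    "outbound_sales": ["conversion_rate", "objection_handling", "product_knowledge"],
}

# Hypothetical criteria that every channel's scorecard shares.
SHARED_CRITERIA = ["policy_compliance"]


def criteria_for(channel: str) -> list:
    """Return the full criteria list for one channel's scorecard."""
    try:
        specific = CHANNEL_CRITERIA[channel]
    except KeyError:
        raise ValueError(f"no scorecard template for channel {channel!r}") from None
    return SHARED_CRITERIA + specific


print(criteria_for("email"))
# ['policy_compliance', 'clarity', 'structure', 'resolution_accuracy']
```

Failing loudly on an unknown channel is deliberate: it’s better than quietly falling back to a generic card that scores agents on the wrong things.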

Step 4: Loop in QA and Supervisors

Don’t build your agent scorecard in a vacuum. The people doing call reviews, running coaching sessions, and actually working with the agents every day know what to include, what’s realistic, and what’s irrelevant. Bring them in early.

A great scorecard reflects reality, not what leadership wishes reality looked like.

Looping the right people in early means fewer fights later when people start pushing back on why a score was “only a 3.”

Step 5: Pilot It. Tweak It. Don’t Overthink It.

Don’t panic about trying to launch the perfect scorecard on day one.

Pick a few agents. Run a two-week test. Get feedback. Ask:

- Were the criteria clear?

- Did it feel fair?

- Were the results useful in coaching conversations?

Based on the feedback received, adjust the scorecards accordingly. Scorecards are living tools. They should grow and adapt as your team and your business evolve. You might find that something you thought mattered actually doesn’t. Or that a scorecard item is too vague. Focus on constantly fixing those issues as you discover them.

Best Practices for Using Agent Scorecards

You’ve built your agent scorecard. The metrics are clear. The scoring system makes sense. You’re off to a good start. Now you need to make sure these tools are used properly, fairly, and regularly.

Run Regular QA Reviews and Calibration Sessions

Scoring inconsistency is the fastest way to make agents tune out feedback. If one QA person gives a “4” for a great call and another gives a “2” for the same call, nobody trusts the scorecard. The solution is regular calibration.

Every couple of weeks (or monthly, if you’re tight on time), get reviewers in a room and have them score the same calls, then compare results. Where scores differ, talk through why. Was the rubric unclear? Did someone weigh tone more heavily than resolution? Find the gaps.
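A calibration session can start from a short report that lists which calls reviewers disagreed on most. This sketch assumes every reviewer scored the same calls on the same 1–5 scale; the call IDs, reviewer names, and the one-point tolerance (`max_spread=1`) are hypothetical.

```python
from statistics import mean


def calibration_report(scores_by_call: dict, max_spread: int = 1) -> list:
    """
    scores_by_call: {call_id: {reviewer: score}} on a shared scale (e.g. 1-5).
    Returns (call_id, spread, mean_score) for calls whose highest and lowest
    reviewer scores differ by more than max_spread, widest disagreement first.
    """
    flagged = []
    for call_id, by_reviewer in scores_by_call.items():
        values = list(by_reviewer.values())
        spread = max(values) - min(values)
        if spread > max_spread:
            flagged.append((call_id, spread, mean(values)))
    return sorted(flagged, key=lambda row: row[1], reverse=True)


session = {
    "call-101": {"ana": 4, "ben": 2, "cho": 4},
    "call-102": {"ana": 3, "ben": 3, "cho": 4},
    "call-103": {"ana": 5, "ben": 2, "cho": 3},
}
for call_id, spread, avg in calibration_report(session):
    print(call_id, spread, round(avg, 2))
```

Here call-103 (a 3-point spread) and call-101 (the “4 versus 2” case) make the discussion list, while call-102 stays within tolerance. Sorting by spread puts the calls most likely to expose an unclear rubric item at the top of the agenda.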

Use Scorecards During Coaching, Not Just for Reports

Too many teams treat scorecards like a performance surveillance tool. Something that lives in a dashboard, gets pulled up once a month, and then filed away. That’s not how it should work.

Used well, scorecards are coaching tools. They give you structure, a shared language to talk about what went well, what needs work, and how to improve.

The best managers don’t just say “your CSAT was low last week.” They sit down with the scorecard and say, “Hey, your tone was great on this call, but notice how you didn’t check for understanding before wrapping up? That might explain the low score.”

Let agents weigh in too. Maybe the system glitched. Maybe the customer was already upset before the call even started. Context matters.

Tie Scorecards to Incentives and Training

If you want agents to care about the scorecard, connect it to things they care about. That doesn’t mean turning it into a disciplinary tool. In fact, if the only time agents hear about their scorecard is when they’re in trouble, that’s a problem.

Instead:

- Use strong scores as part of eligibility for bonuses or performance-based rewards.

- Let scorecard trends guide who gets what kind of training (and when).

- Celebrate improvements, not just “top scores.” Show people that effort and growth get noticed.

Some companies even build “mini milestones” based on scorecard categories, like recognizing someone who improves their soft skills score by 20% over a quarter.
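A milestone like “improves their soft skills score by 20% over a quarter” needs one decision first: relative improvement (60 to 72 counts) or percentage points (60 to 80 counts). This sketch assumes the relative reading; the agent names and scores are made up.

```python
def relative_improvement(start: float, end: float) -> float:
    """Percentage change from start to end; start must be non-zero."""
    if start == 0:
        raise ValueError("start score must be non-zero")
    return 100 * (end - start) / start


def milestone_hits(quarter_scores: dict, min_gain_pct: float = 20.0) -> list:
    """quarter_scores: {agent: (start_of_quarter, end_of_quarter)} for one category."""
    return sorted(
        agent
        for agent, (start, end) in quarter_scores.items()
        if relative_improvement(start, end) >= min_gain_pct
    )


soft_skills = {"sarah": (60.0, 75.0), "dev": (80.0, 88.0), "mina": (50.0, 61.0)}
print(milestone_hits(soft_skills))  # ['mina', 'sarah']
```

A relative target is easier to hit from a low starting score (Dev’s 8-point gain is only 10%), so teams that want to recognize strong performers who are already near the ceiling may prefer a points-based target for them.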

Keep It Simple and Transparent

If agents don’t understand the scorecard, how it works, what’s being scored, and why it matters, they won’t engage with it. You don’t need to dumb it down, but you do need to make it digestible.

Best practices here:

- Share the full scorecard (rubric and all) with your team.

- Explain how each section is evaluated, ideally with examples.

- Make sure agents can access their scores and comments without jumping through hoops.

Transparency builds trust, which leads to better conversations, better coaching, and better results.

Mistakes to Avoid with Agent Scorecards

Even the best agent scorecard can flop if you fall into some of the usual traps. Unfortunately, these are pretty easy to stumble into, especially when you’re building your first version of a new scorecard or trying to scale one across a larger team.

So, here’s a quick list of the big mistakes companies make, and how to sidestep them.

Trying to Track Everything at Once

It’s tempting. You start thinking, “Well, we should probably measure empathy, adherence, call length, upsell attempts, technical accuracy, and…”

Before you know it, your scorecard has 20 different categories and nobody, not agents, not reviewers, knows where to focus.

Start lean. Focus on the 3–5 categories that really move the needle for your business. You can always layer in more complexity later, but clutter kills clarity.

Using Scorecards Only for Discipline

This is a huge one. If the only time an agent hears about their scorecard is when they’re “in trouble,” it creates a toxic relationship with the whole system.

The scorecard starts to feel like a trap, not a tool.

Make feedback regular, constructive, and two-sided. Use scorecards to celebrate progress, not just highlight mistakes. Coaching isn’t a punishment, it’s development.

Letting the Scorecard Go Stale

Businesses evolve. Processes shift. Customer expectations change. But too often, the scorecard stays frozen in time, measuring things that don’t really matter anymore.

Set a reminder to review your scorecard quarterly (or at least twice a year). Check whether your metrics still reflect your goals. Ask agents and QA staff for feedback. Tweak where needed.

Remember: a scorecard is not a “set-it-and-forget-it” document. It’s a living thing.

Level up Your Agent Scorecards

Agent scorecards won’t fix every coaching challenge or transform your customer experience overnight. But when used intentionally, they’re one of the most powerful tools a contact center has.

They give structure to feedback. They create transparency for agents. And they help teams get better over time.

If you’re just getting started, don’t overthink it. Start small. Measure what matters. Use the results to have real conversations. Then keep improving. Adjust as your team grows. Revisit the rubric when business goals shift. Ask for input. Treat your scorecard like a dialogue, not a diagnosis.

If you want to dive deeper into the value of data-driven call center training, read our guide here.

More from our blog

Virtual agents are quickly becoming a crucial part of the modern contact center, extending the functionality of the traditional chatbot to transform self-service.

Virtual agents are quickly becoming a crucial part of the modern contact center, extending the functionality of the traditional chatbot to transform self-service.

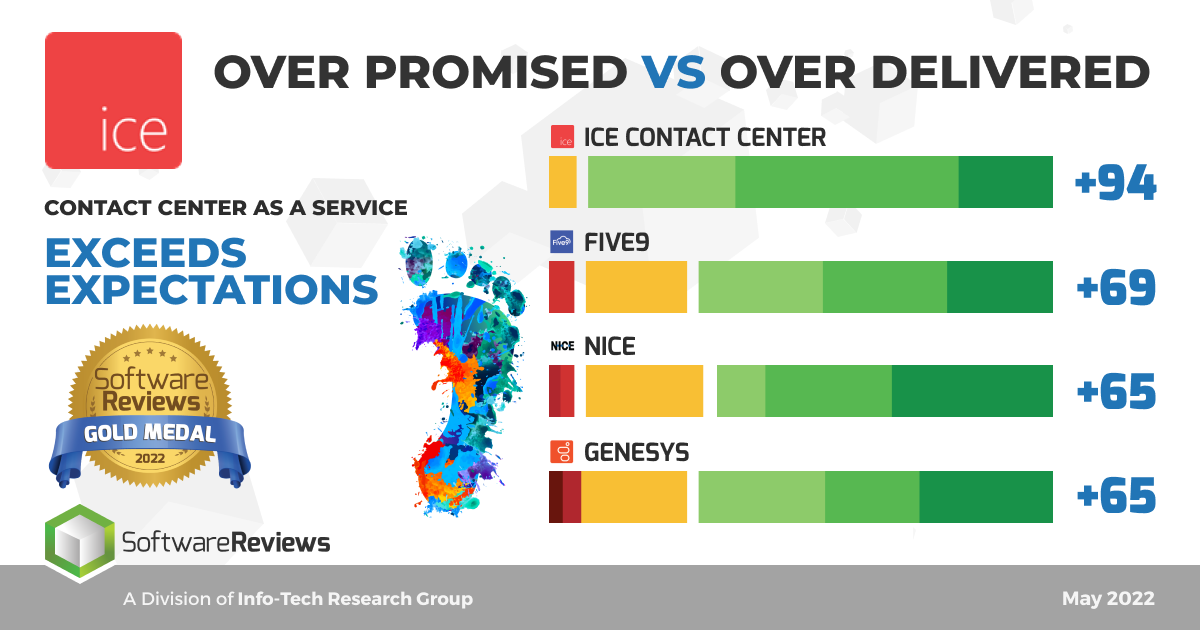

ComputerTalk is excited to announce that we’re scored a leader in exceeding client expectations in InfoTech Research Group’s SoftwareReviews.

ComputerTalk is excited to announce that we’re scored a leader in exceeding client expectations in InfoTech Research Group’s SoftwareReviews.

ComputerTalk, a CCaaS provider located in Markham, Canada, is excited to announce the upcoming release of ice 13. Following the recent release of ice 12.1, version 13 provides more enhancements to the current features and tools. Continue reading to discover...

ComputerTalk, a CCaaS provider located in Markham, Canada, is excited to announce the upcoming release of ice 13. Following the recent release of ice 12.1, version 13 provides more enhancements to the current features and tools. Continue reading to discover...